Identifying the Neural Substrate of Conscious Perception

Daniel Freeman, Ph.D. | MIT Lincoln Laboratory | Contact: perception@ll.mit.edu

The goal of this project is to understand the relationship between brain activity and conscious perception. Perception here spans everything from basic sensations, such as vision or pain, to affective states, such as joy or emotional distress. To do this, we employ a variety of techniques, including transcranial ultrasound, comparative neuroanatomy, and artificial intelligence. Ultimately, we strive not only to map the neural circuits responsible for conscious perception, but also to explore the physical mechanisms that give rise to conscious experience.

Transcranial Ultrasound for Neuromodulation

One of our core research techniques uses transcranial ultrasound to modulate neural activity in humans safely and non-invasively, through the intact skull. This technology alters activity in a targeted structure rather than only recording it. That lets experimenters test what role specific neural structures play in conscious perception, which opens new avenues for neuroscience research. To enable these experiments, we develop novel transducer designs that improve how precisely transcranial ultrasound can be focused.

Transcranial focused ultrasound systems with spherical (left) and phased-array (right) transducers.

Comparative Neuroanatomy

Our work in comparative neuroanatomy examines how neural structures differ across species, to shed light on how animals process sensory information. By comparing these variations, we aim to understand how distinct neural architectures evolved to support sensory processing. We focus specifically on identifying the neural activity required to elicit conscious perception of a sensory stimulus (e.g., visual, auditory, or tactile).

The evolution of sensory cortex across major mammalian lineages.

Figure from Krubitzer, L. A., & Prescott, T. J. (2018). The Combinatorial Creature: Cortical Phenotypes within and across Lifetimes. Trends in Neurosciences, 41(10), 744-759.

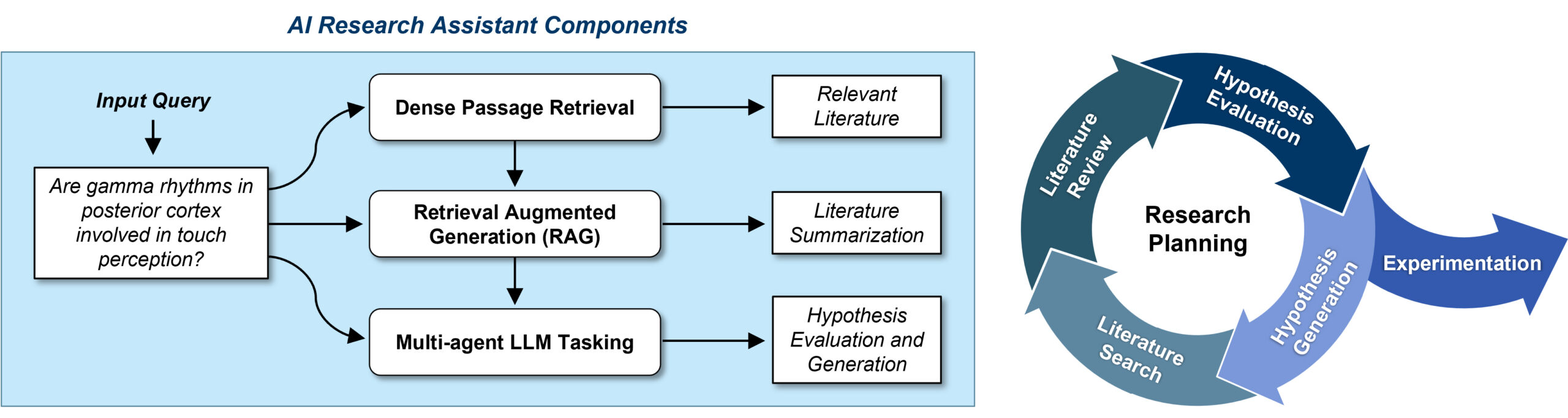

Artificial Intelligence as Research Accelerator

Research into conscious perception lies at the intersection of neurobiology and physics. To address the challenges of this interdisciplinary field, we develop and leverage collaborative AI agents, including large language models (LLMs) used as research assistants. These tools draw on deep knowledge across the relevant domains, so they can lower the communication barriers between those fields and help generate new hypotheses about the mechanisms underlying conscious perception. As these AI systems evolve, they may further bridge the gap between neuroscience and testable theories of perception, driving future experimental and theoretical breakthroughs.

Collaborators:

Seung-Schik Yoo, Ph.D.

Harvard Medical School

Brigham & Women’s Hospital

To learn more about this project, please reach out to perception@ll.mit.edu.